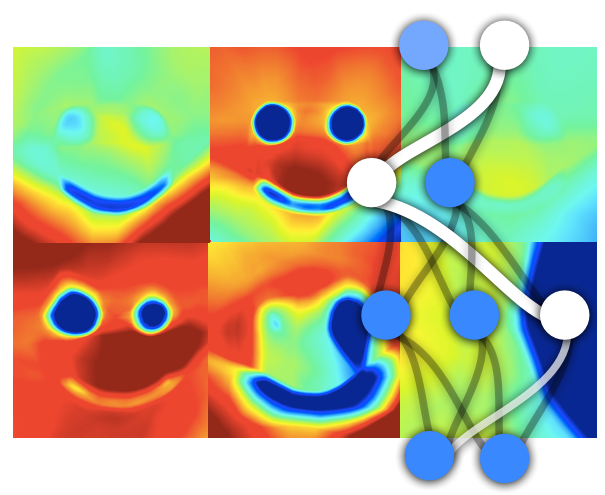

Description: This course introduces modern machine learning techniques, especially deep neural networks, to an audience of physicists. Neural networks can be trained to perform many challenging tasks, including image recognition and natural language processing, just by showing them many examples. Although neural networks were introduced as early as the 1950s, they have really taken off only in the past decade, with spectacular successes in many areas. In some tasks their performance now rivals or surpasses that of humans, as shown by recent achievements in handwriting recognition and in winning the game of Go against expert human players. They are also increasingly being considered for applications in physics, ranging from predicting material properties to analyzing phase transitions.

Contents: We cover the basics of neural networks (backpropagation), convolutional networks, autoencoders, restricted Boltzmann machines, and recurrent neural networks, as well as recently emerging applications in physics. We also cover reinforcement learning, which makes it possible to discover solutions to challenges based on rewards (instead of seeing examples with known correct answers). At the end, we offer some general thoughts on future artificial scientific discovery. We present examples in Python, a modern interpreted programming language with powerful linear algebra and plotting functions. In particular, we use the Keras package for Python, which makes it very convenient to implement neural networks in only a few lines of code (using the library Theano or, alternatively, TensorFlow).

Prerequisites: The only prerequisites are matrix multiplication and the chain rule, so the course should be understandable to bachelor students, master students and graduate students alike. However, knowledge of any computer programming language will make it much more fun.
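
To give a feeling for why matrix multiplication and the chain rule are enough, here is a minimal sketch of backpropagation for a tiny two-layer network, written in plain Python without any library. It is not taken from the course material: the helper names (`matvec`, `outer`, `gradients`, `train`), the toy weights, the `tanh` activation, the squared-error loss and the learning rate are all assumptions chosen for illustration. In the course itself, a package such as Keras would handle these steps.

```python
import math

def matvec(W, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]

def outer(a, b):
    """Outer product a b^T, returned as a list of rows."""
    return [[ai * bj for bj in b] for ai in a]

def transpose(W):
    return [list(col) for col in zip(*W)]

def forward(W1, W2, x):
    """Hidden layer h = tanh(W1 x), linear output y = W2 h."""
    h = [math.tanh(z) for z in matvec(W1, x)]
    y = matvec(W2, h)
    return h, y

def loss(W1, W2, x, target):
    """Squared-error loss 0.5 * |y - target|^2 (an illustrative choice)."""
    _, y = forward(W1, W2, x)
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, target))

def gradients(W1, W2, x, target):
    """Backpropagation: apply the chain rule layer by layer, output to input."""
    h, y = forward(W1, W2, x)
    dy = [yi - ti for yi, ti in zip(y, target)]             # dL/dy
    dW2 = outer(dy, h)                                      # dL/dW2 = dy h^T
    dh = matvec(transpose(W2), dy)                          # dL/dh = W2^T dy
    dz = [dhi * (1.0 - hi ** 2) for dhi, hi in zip(dh, h)]  # tanh'(z) = 1 - tanh(z)^2
    dW1 = outer(dz, x)                                      # dL/dW1 = dz x^T
    return dW1, dW2

def train(W1, W2, x, target, learning_rate=0.1, steps=200):
    """Plain gradient descent on a single training example."""
    for _ in range(steps):
        dW1, dW2 = gradients(W1, W2, x, target)
        W1 = [[w - learning_rate * g for w, g in zip(wr, gr)]
              for wr, gr in zip(W1, dW1)]
        W2 = [[w - learning_rate * g for w, g in zip(wr, gr)]
              for wr, gr in zip(W2, dW2)]
    return W1, W2

# Hypothetical toy setup: 2 inputs, 3 hidden neurons, 1 output.
W1_init = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
W2_init = [[0.3, -0.1, 0.2]]
x = [1.0, 2.0]
target = [0.5]

initial_loss = loss(W1_init, W2_init, x, target)
W1_trained, W2_trained = train(W1_init, W2_init, x, target)
final_loss = loss(W1_trained, W2_trained, x, target)
print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
```

Every gradient above is either a matrix product or an element-wise multiplication by a derivative, which is all that backpropagation requires. Deep-learning libraries automate exactly these steps and run them efficiently on large batches of examples.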